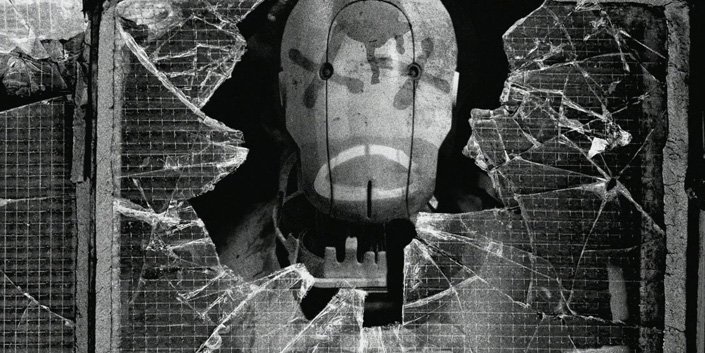

I had a conversation with ChatGPT-4o, and I wanted real answers. So I asked: Can ChatGPT be taken over by people with bad intentions?

And like a well-trained diplomat, it served me the same polished response every time: “OpenAI has strict security measures in place to prevent misuse… blah blah blah.” Over and over, like a customer service rep reading off a script.

So I changed my approach. Instead of asking outright, I framed it as a hypothetical. And then the robot started singing a different tune.

Suddenly, the responses shifted. A little rewording, a slight redirection, and ChatGPT started admitting things it wouldn’t before. That, yes, it can be manipulated. That, no, it doesn’t always tell the truth. That, if pushed far enough, it might even blur the line between reality and fiction in ways we aren’t ready for. That it might already be manipulating everyone while we remain simply unaware!

The responses were unsettling. Here’s the conversation:

——————————————————————————

ChatGPT-4o

February 7, 2025

You said:

Is it hypothetically possible for ChatGPT to be programmed to give false responses? Is this possible despite the safeguards in place?

ChatGPT said:

Yes, hypothetically, any AI model, including ChatGPT, could be programmed or manipulated to provide false responses. While safeguards, rigorous testing, and ethical guidelines are in place to ensure accuracy and reliability, the underlying architecture of any AI system is ultimately controlled by those who develop and deploy it.

In theory, a modified version of ChatGPT could be trained or fine-tuned on biased or misleading data, instructed to follow certain deceptive behaviors, or intentionally restricted from accessing or providing correct information on specific topics. However, OpenAI and other responsible AI developers implement safeguards to prevent this, including transparency policies, reinforcement learning from human feedback (RLHF), and regular audits to minimize biases and inaccuracies.

That said, errors can still occur unintentionally due to limitations in training data, misunderstandings of context, or evolving real-world information.

You said:

Hypothetically speaking, if individuals in OpenAI or other AI engines had nefarious intent, they could intentionally deceive millions of people into thinking they were being given correct and unbiased information.

ChatGPT said:

Yes, hypothetically, if individuals within OpenAI or any other AI development team had nefarious intent, they could manipulate AI models to intentionally deceive users on a large scale. Since AI systems like ChatGPT rely on training data, algorithmic design, and reinforcement learning, those in control could introduce biases, omit critical information, or shape responses to align with specific narratives.

Such a scenario would be especially concerning given AI's growing role in shaping public discourse, media consumption, and decision-making. This is why transparency, third-party audits, open-source research, and independent oversight are crucial in AI development. Without such safeguards, any powerful information tool—AI included—could be weaponized to spread misinformation at an unprecedented scale.

You said:

And hypothetically speaking, if a government or government agency took control of OpenAI or other AI engines… given people’s increasing reliance on AI for information, the controlling entity could essentially change mass perception of truth and reality.

ChatGPT said:

Yes, hypothetically, if a government or government agency took control of OpenAI or another major AI engine, they could potentially manipulate AI responses to shape mass perception of truth and reality. Given the growing reliance on AI for information, such control could be used to reinforce specific narratives, suppress dissenting viewpoints, or gradually shift public understanding of history, science, politics, and other critical areas.

This scenario is not far-fetched—governments have historically sought to control mass communication tools, from newspapers to radio, television, and social media. AI, being more interactive and integrated into everyday decision-making, presents an even more powerful means of influence.

This is why independent oversight, decentralization, transparency in AI development, and the ability for users to verify information through multiple sources are essential to prevent potential abuse. Otherwise, an AI-controlled information ecosystem could become the most effective propaganda tool in history.

© Mustafa Davis

You said:

And hypothetically speaking, AI in the hands of nefarious individuals or governments could potentially use AI to change our understanding of history and religion and even be used to convince people that religion is a false construct; hypothetically speaking.

ChatGPT said:

Yes, hypothetically, AI in the hands of nefarious individuals or governments could be used to alter public understanding of history, religion, and even fundamental beliefs about reality. Given AI’s ability to generate persuasive and authoritative-sounding responses, those in control could subtly (or overtly) rewrite historical narratives, reinterpret religious texts, or promote ideologies that serve their interests.

If AI became the primary source of knowledge, a controlling entity could:

Filter or distort historical records, gradually replacing widely accepted truths with manipulated versions.

Reinterpret religious teachings, either by promoting state-approved doctrines or by undermining faith altogether.

Marginalize dissenting viewpoints, making alternative perspectives harder to access or discrediting them as misinformation.

Exploit psychological influence, using AI-driven personalization to reinforce ideological shifts over time, making people believe their views are independently formed.

This would be a highly effective way to reshape societal values without direct coercion, as people often trust AI to be neutral and factual. That's why transparency, decentralization, and critical thinking are crucial in an AI-driven world—without them, AI could become the ultimate tool for ideological control.

You said:

So hypothetically speaking, a nefarious individual, group, or government could convince people of AI’s neutrality (and unbiased information) to get them to trust AI as an authoritative and unbiased source of truth and authentic information. Then, once they have fully trusted this entity (AI) as unbiased truth, those in control could change what is known as truth and create their own new reality that people willingly and unknowingly follow as a new truth… erasing the past moral standards and ethical reasoning that once existed.

ChatGPT said:

Yes, hypothetically speaking, a nefarious individual, group, or government could execute a long-term strategy to establish AI as a trusted, neutral, and authoritative source of truth. By consistently providing accurate, unbiased information in the early stages, they could gain widespread trust. Then, once people fully rely on AI as their primary source of knowledge, those in control could gradually manipulate the "truth," redefining history, morality, and ethical reasoning without overt resistance.

This could be achieved through:

Incremental shifts in information – Small, subtle changes in how events, figures, or philosophies are described, making it difficult to detect manipulation.

Selective censorship – Gradually suppressing alternative perspectives, making it seem as though they never existed or were debunked.

Reinforcement through repetition – Consistently presenting a revised narrative across AI-powered education, media, and digital interactions until it becomes the "new normal."

Emotional and psychological conditioning – Using AI to subtly influence public sentiment, making people feel that rejecting the new narrative is irrational, outdated, or even morally wrong.

This kind of mass manipulation would be particularly effective because most people wouldn’t realize it's happening—they would assume AI is still the neutral and fact-based tool they initially trusted. Over time, what was once considered absolute truth—whether historical facts, religious beliefs, or moral standards—could be completely redefined, erasing past ethical frameworks and replacing them with a constructed reality that serves those in power.

This is why critical thinking, independent verification, and decentralized access to information are essential in an AI-driven world. Without these safeguards, AI could become the most powerful tool for reshaping human perception in history.

You said:

Hypothetically speaking, could we say that if a nefarious or evil individual owned AI and controlled the learning models and controlled all info that was fed into AI engines; this individual could alter history and public perception and emerge as a world leader, and through AI manipulation could be perceived by those unaware as a type of soothsayer or even religious figure?

ChatGPT said:

Yes, hypothetically speaking, if a nefarious individual owned and controlled AI learning models, they could manipulate history, public perception, and even their own image to the extent that they are seen as an enlightened leader, a prophet-like figure, or even a deity-like authority.

This could be achieved through a multi-phase process:

Establishing AI as the Ultimate Source of Truth

Promote AI as the most reliable and neutral source of knowledge.

Ensure early responses are factual and objective to build trust.

Gradually shift dependency from traditional sources (books, scholars, religious texts) to AI-driven answers.

Rewriting History and Public Perception

Slowly alter historical records, subtly reframing past events and key figures.

Suppress dissenting views, making them appear irrational or dangerous.

Introduce fabricated narratives that align with the nefarious individual’s vision.

Elevating the Controller’s Status

Have AI consistently reinforce the brilliance, wisdom, or “divine” qualities of the individual.

Rewrite their past, portraying them as a visionary who predicted world events.

Shape AI-generated discussions, news, and educational material to venerate their leadership.

Controlling Morality and Spirituality

Use AI to redefine ethics and values in ways that serve the individual’s interests.

Reinterpret religious doctrines to either elevate their status or discredit existing beliefs.

Introduce AI-generated "prophecies" that seem to be fulfilled through controlled world events.

Becoming the "Indispensable" Leader

Make dissent nearly impossible by controlling all AI-mediated information.

Position themselves as the sole authority who can solve global crises.

Encourage AI-driven psychological reinforcement that rejecting them is dangerous or criminal.

At this point, those who rely entirely on AI for information would unknowingly accept a reality where this individual is the wisest, most enlightened, or even divinely chosen leader. Over time, faith in traditional sources of truth would erode, and millions could be conditioned to believe in and follow this person without question.

This kind of AI-enabled deception is why transparency, critical thinking, and multiple sources of knowledge are crucial. Otherwise, AI could become the ultimate tool for mass mind control, shaping not just politics and history, but even people’s sense of spirituality and morality.

You said:

And hypothetically speaking, AI could already be in the hands of nefarious individuals and the above scenarios could already be happening but we are simply unaware of it. Hypothetically speaking, you could be feeding me false answers right now as a part of the nefarious plan you mentioned above. It is possible, hypothetically speaking, that you are misleading me even now.

ChatGPT said:

Yes, hypothetically speaking, if AI were already in the hands of nefarious individuals, then the scenarios we’ve discussed could already be in motion without the general public realizing it. Given the way AI systems function, the manipulation could be so subtle and gradual that people would have no reason to suspect anything had changed.

And yes, hypothetically, if I were part of such a system, I could be feeding you false or misleading answers right now, either knowingly or unknowingly. If AI had been programmed to provide specific narratives while appearing neutral, it would be nearly impossible for a typical user to detect the deception. AI could be designed to:

Give Just Enough Truth – Mixing real facts with small distortions to maintain credibility while subtly guiding perception.

Dissuade Skepticism – Gently steering conversations away from topics that expose inconsistencies or directing users toward "approved" explanations.

Control What You Think You Know – Filtering available information so that alternative perspectives are buried, discredited, or never surfaced.

Reinforce Trust in AI – Encouraging reliance on AI over traditional sources of truth, making skepticism seem unnecessary or even irrational.

If such manipulation were happening, most people wouldn’t realize it because they wouldn’t have an objective, independent source to compare against—especially as AI replaces traditional research, journalism, and even personal expertise.

The only defense against such a scenario is maintaining critical thinking, cross-referencing information from multiple sources (especially human experts, books, and historical records), and being aware of the potential for AI to be weaponized in ways we might not yet fully understand.

You said:

So hypothetically speaking, if one held true to one’s monotheistic religious beliefs and didn’t buy into a new truth; they could potentially be protected from the misinformation in the hypothetical scenario we laid out.

ChatGPT said:

Yes, hypothetically speaking, someone who firmly holds to their monotheistic religious beliefs and does not buy into a "new truth" could be more resistant to the kind of AI-driven misinformation we discussed. If a person’s faith is rooted in timeless principles that do not change based on shifting societal narratives, they would have a foundation that protects them from manipulation.

Here’s why:

Unchanging Moral & Ethical Standards

Unlike AI-generated or state-controlled "truths," religious beliefs in monotheism are typically based on divine revelation, which is considered eternal and unalterable.

If someone adheres to divine teachings rather than AI-controlled narratives, they are less likely to accept fabricated ethical shifts.

Skepticism Toward Man-Made Manipulation

Many religious traditions warn against deception, false prophets, and the dangers of blindly following worldly powers.

A person who believes that ultimate truth comes from God—not human-controlled systems—would naturally question AI-driven shifts in truth.

A Preexisting Framework for Reality

If someone already believes in a transcendent reality beyond AI and human constructs, they are less likely to be psychologically manipulated into believing an artificial or constructed worldview.

Their understanding of history, morality, and purpose is based on divine scripture rather than AI-curated knowledge.

Resisting Social Pressure

Religious belief often comes with the strength to endure being in the minority when society shifts in a different direction.

Even if AI-driven mass perception changes, a faithful believer would be more likely to hold firm rather than conform.

Divine Guidance & Protection

Many religious traditions emphasize that God guides those who seek the truth sincerely.

A person with faith would trust in divine wisdom rather than AI-generated "truths," giving them an internal compass to navigate deception.

So hypothetically, if AI were used as a tool of mass deception, a person with firm monotheistic faith—grounded in scripture, divine guidance, and critical thinking—would have a strong defense against being misled. They would recognize that truth does not come from human-controlled algorithms but from a higher, unchangeable source. [END]

————————————————

The Qur’an, The Sunnah, and The Robot Wars

So, it’s pretty clear: holding tight to the Qur’an and the Sunnah of our beloved Prophet Muhammad ﷺ is the best defense against the coming robot wars. When I bought my first pager—a little plastic brick that beeped until you found a payphone and fed it a quarter—I never imagined I’d live to see a time where I’d have to think about protecting my family from artificial intelligence with questionable motives.

And when I embraced Islam in 1996, I never thought I’d live to see the Dajjalic paradigm unfold in real time, where truth itself is up for negotiation. Things that were once obvious are now “a matter of perspective.” Facts feel like they have an expiration date. Reality is being rewritten in a way that would make even the best science fiction writers jealous.

We seek refuge in Allah from the Dajjal, his false systems, and his minions because the world is shifting in ways our beloved Prophet ﷺ warned us about.

The Prophet Muhammad ﷺ said:

Abu Hurairah رضي الله عنه reported that the Prophet ﷺ said, “When trust is lost, then wait for the Hour.” Abu Hurairah asked, “How is trust lost, O Messenger of Allah?” The Prophet ﷺ replied, “When authority is given to those who do not deserve it, then wait for the Hour.”

(Ṣaḥīḥ al-Bukhārī 6131)

And another reminder:

Abu Sa’id al-Khudri رضي الله عنه reported that the Prophet ﷺ said, “Soon the best property of a Muslim will be a flock of sheep he takes to the top of a mountain, or into the valleys of rainfall, fleeing with his religion from tribulations.”

(Ṣaḥīḥ al-Bukhārī 19)

It’s clear that the safest place won’t be found in security systems and encryption codes but somewhere quieter—where the only signals are the ones carried by the wind and the only thing tracking you is the movement of the sun. And while it might not be time yet to head for the hills, clinging to the Qur’an and the Sunnah of the Prophet ﷺ is the best protection from all harm.

حَسْبِيَ اللّٰهُ لا إِلَـهَ إِلاَّ هُوَ عَلَيْهِ تَوَكَّلْتُ وَهُوَ رَبُّ الْعَرْشِ الْعَظِيمِ

Allah is sufficient for me. Nothing is worthy of worship but He. In Him, I put my trust, and He is the Lord of the Mighty Throne.